Terminology and Jargon

This is a short reference to terms, their definitions and, where relevant, how they relate to DevMate.

ISTQB

We are affiliated with the International Software Testing Qualifications Board. We aim to be consistent with their terminology wherever appropriate.

Defining Tests

Equivalence Class

“A subset of the value domain of a variable within a component or system in which all values are expected to be treated the same based on the specification.” - ISTQB

In unit testing, an equivalence class is a set of input values that are expected to produce the same output, behavior, or test outcome.

If we have a method that checks whether someone's age is >= 18 and <= 25, then we need the following equivalence classes.

| Equivalence Class | Representative |
|---|---|
| All values between 0 and 17, inclusive | Any value within this range, for example 16. 16 is then the Representative for this set of invalid values. |
| 18 to 25, inclusive | Any value within this range, for example 22. 22 is then the Representative for this set of valid values. |
| All values greater than or equal to 26 | Any value greater than or equal to 26, for example 33. 33 is then the Representative for this second set of invalid values. |
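As a minimal sketch of the classes above, assume a hypothetical Python function `is_eligible` (the name and the choice of Python are illustrative and not part of DevMate). One representative per class is enough to exercise that class:

```python
def is_eligible(age: int) -> bool:
    """Hypothetical method under test: True when 18 <= age <= 25."""
    return 18 <= age <= 25


# One representative per equivalence class, taken from the table above:
# class description -> (representative, expected result).
REPRESENTATIVES = {
    "0 to 17 (invalid)": (16, False),
    "18 to 25 (valid)": (22, True),
    "26 and above (invalid)": (33, False),
}


def check_representatives() -> None:
    """Assert that every representative yields the expected result."""
    for name, (age, expected) in REPRESENTATIVES.items():
        assert is_eligible(age) == expected, name


check_representatives()
```

Any other value from the same class, for example 20 instead of 22, is expected to give the same outcome, which is why a single representative suffices.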

Equivalence Class Partitioning

Equivalence Class Partitioning is the process of defining Equivalence Classes. To see how DevMate handles this, please refer to our tutorial Detailed Configuration - step by step.

Representatives

A single value that is chosen to represent an Equivalence Class.

Boundary Values

“A minimum or maximum value of an ordered equivalence partition.” - ISTQB

Errors can often occur at the boundaries of Equivalence Classes, so one should consider testing at and around those values.

Let’s say we have a method whose requirements state “We insure people between the ages of 18 and 25”.

There could be errors at the boundaries of 18 and 25. Were the requirements specific enough? Has the developer misinterpreted the requirements? Has the developer coded > rather than >=?

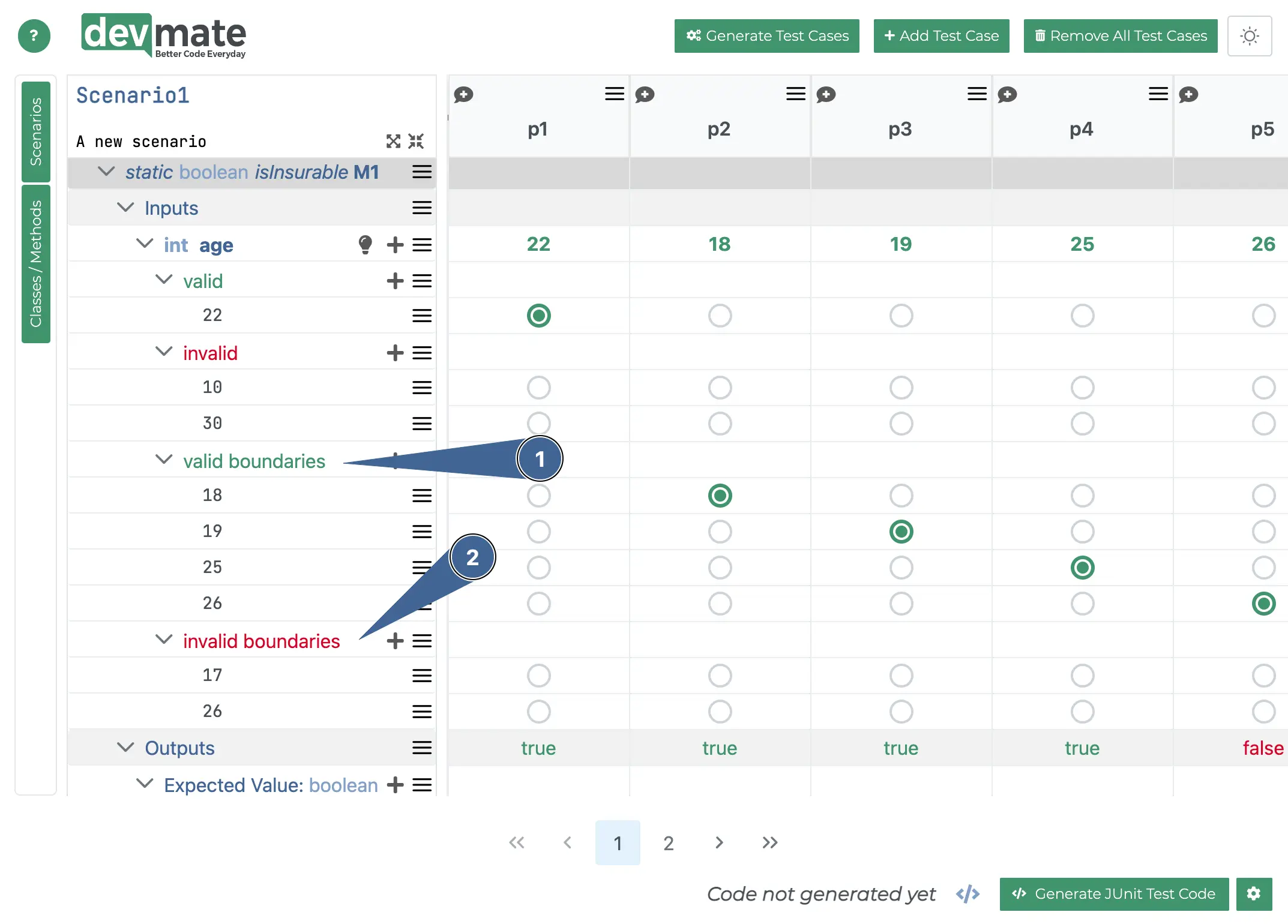

You might want to add boundary values when doing your Equivalence Class Partitioning to specifically test at and around the boundaries. The screenshot shows how you might define boundary values.

You can see we are going to test for valid boundary representatives and invalid ones.
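The following sketch illustrates why boundary representatives matter. It assumes the requirement is read as inclusive (18 and 25 are both insured) and uses two hypothetical functions, `is_insurable` and a deliberately wrong `is_insurable_buggy`, neither of which comes from DevMate:

```python
def is_insurable(age: int) -> bool:
    """Hypothetical implementation: insures ages 18 to 25, inclusive."""
    return 18 <= age <= 25


def is_insurable_buggy(age: int) -> bool:
    """Same method with the mistake mentioned above: > instead of >=."""
    return 18 < age <= 25


# Values at and just outside each boundary, with the expected result.
BOUNDARY_CASES = [(17, False), (18, True), (25, True), (26, False)]


def failing_cases(method) -> list:
    """Return the boundary ages for which the method gives the wrong answer."""
    return [age for age, expected in BOUNDARY_CASES if method(age) != expected]
```

Here `failing_cases(is_insurable)` returns an empty list, while `failing_cases(is_insurable_buggy)` returns `[18]`. A mid-range representative such as 22 would pass for both versions and would not reveal the mistake.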

Test Cases

“A set of preconditions, inputs, actions (where applicable), expected results and postconditions, developed based on test conditions.” - ISTQB

The requirements for a method or process are rarely so simple that a single test case suffices. Once you have defined your Equivalence Classes, you will generate as many test cases as are required to fully test the requirements.

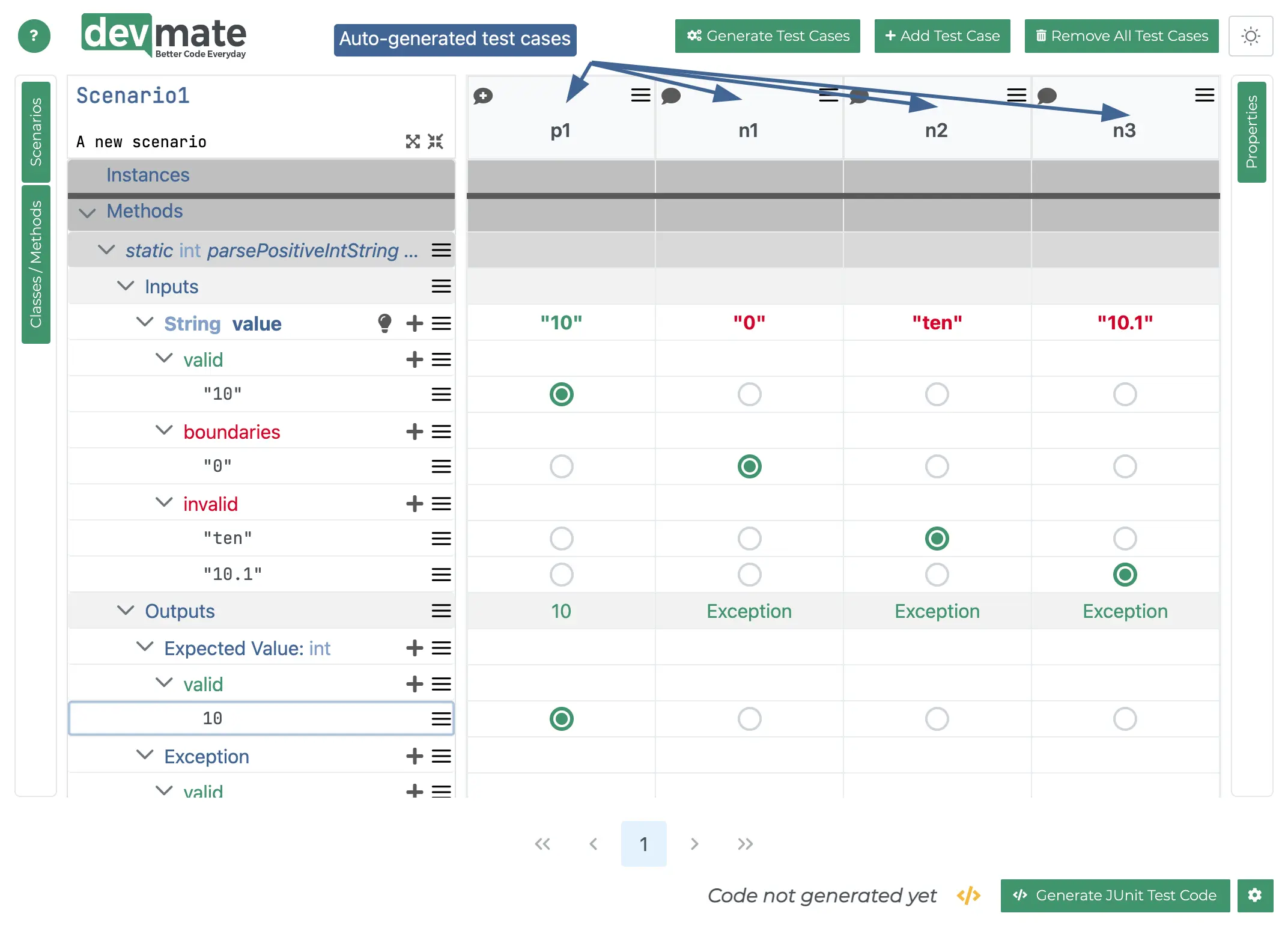

DevMate generates test cases for you automatically upon completion of your Equivalence Class Partitioning, which is a huge time saver. You can also add further test cases if you feel they are required.

You can see how DevMate does this in the tutorial Detailed Configuration - step by step. The screenshot in this section shows automatically generated test cases. The columns p1, p2, etc. are individual test cases.

Parameterized Test Cases

Please refer to Test Cases above first.

If we were to hand-code a unit test for the example you see above, we might code each case in its own block of code. Given that every test case has the same structure, a tidier way to do this is to create an internal function that handles an individual test case and receives the inputs and expected output as parameters.

This is exactly what DevMate does when you generate your test code with a single button press. For most real-world cases, which may be more complex than the above example, this can be a significant time saving. The developer no longer has to write test code (or debug it!).
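A hand-written version of this idea might look like the sketch below. It uses Python's standard `unittest` module. The method `is_insurable`, the helper `_run_case` and the case values are assumptions made for illustration, and this is not the code DevMate generates:

```python
import unittest


def is_insurable(age: int) -> bool:
    """Hypothetical method under test: insures ages 18 to 25, inclusive."""
    return 18 <= age <= 25


class IsInsurableTest(unittest.TestCase):
    # Each tuple is one test case: (name, input, expected output).
    CASES = [
        ("p1", 16, False),
        ("p2", 18, True),
        ("p3", 22, True),
        ("p4", 25, True),
        ("p5", 33, False),
    ]

    def _run_case(self, age: int, expected: bool) -> None:
        """Internal helper that executes a single test case."""
        self.assertEqual(is_insurable(age), expected)

    def test_all_cases(self) -> None:
        for name, age, expected in self.CASES:
            # subTest reports each case separately if it fails.
            with self.subTest(case=name):
                self._run_case(age, expected)
```

Adding a test case then means adding one tuple to `CASES` rather than writing another block of test code.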

General terms

Black Box Testing

“A test technique based on an analysis of the specification of a component or system.” - ISTQB

A Black Box Test is derived solely from the specification/requirements, which is why black box testing is also called specification testing. It forces the tester to concentrate on what the software does, not how it does it.

Because black box testing techniques require no knowledge of the system’s implementation, testing and implementation can be led and even done by non-developers.

Black Box Testing is likely a mainstay of your testing methodology. It has several major advantages, whether you’re following TDD principles or not:

The developer can share or even shift the responsibility of requirements specification to other people such as POs, QA Engineers and Domain Experts.

If you are implementing TDD then you are black box testing by definition.

Especially using tools like DevMate, the developer can spend more time writing functional code rather than test code.

You may not necessarily get perfect code coverage (please see “Obsessed with Code Coverage?”) but you will get better quality.

You will be a lot more productive and efficient in your ability to write quality tests across an entire application.

White Box Testing

“Testing based on an analysis of the internal structure of the component or system.” - ISTQB

A White Box Test is written by a developer who has an in-depth knowledge of how the functional code is implemented. The developer then defines test cases that cover as much code as possible. White Box Testing is the antithesis of black box testing and TDD.

White box testing techniques usually focus on code coverage, taking into account statements, decisions/branches, condition testing or path testing.

In this whole process the specification/requirements do not necessarily play a role. White box testing tries to maximize the amount of code that is tested, but it doesn’t necessarily validate if the tested code meets the actual requirements definition.

Furthermore, the tight coupling between code and white box tests makes the tests fragile. Fragile tests tend to cause false positives when refactoring code, that is, they fail even though the behavior is still correct.

Unit Test

“A test that focuses on individual hardware or software components.” - ISTQB

We suggest reading Writing testable code to understand what is required to make code properly unit testable.

A unit test is an automated test that verifies a small part of code, also called a unit, quickly and in isolation from other components such as API or database calls.

It is important to be aware of what exactly counts as a unit and how to isolate it. A unit can be a class or a collection of classes that reflects a behavior of the system, no matter how granular. Isolation means that unit tests act independently of each other and that certain dependencies are detached using stubs and mocks.

A good unit test should also follow FIRST principles.

Integration Test

“A test level that focuses on interactions between components or systems.” - ISTQB

We discussed Unit Tests above - they verify small units or components in isolation and provide fast feedback.

In the absence of speed and isolation, the next level of test is an integration test. Typically, an integration test verifies the code that glues your application together and the interaction with third-party components or systems. While unit tests focus on a small unit of behavior, integration tests might involve many modules of your production code.

To effectively protect against regression and reduce maintenance costs, you should mock as few third-party dependencies as possible. If your system requires a call to a database, then the integration test should interact with a test instance of that database. Only dependencies that are not under your control, for example a time server, should be mocked.
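The sketch below illustrates this guideline under a few assumptions: the component `PolicyStore` is hypothetical, an in-memory SQLite database stands in for "a test instance of that database", and an injected `now` callable stands in for the time server. Only the time source is replaced, and the SQL runs against a real database engine:

```python
import sqlite3
from datetime import datetime, timezone
from typing import Callable


class PolicyStore:
    """Hypothetical component that persists policies in a SQL database."""

    def __init__(self, conn: sqlite3.Connection, now: Callable[[], datetime]):
        self._conn = conn
        self._now = now  # Time source is injected so a test can replace it.
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS policies (holder TEXT, created_at TEXT)"
        )

    def add(self, holder: str) -> None:
        self._conn.execute(
            "INSERT INTO policies (holder, created_at) VALUES (?, ?)",
            (holder, self._now().isoformat()),
        )
        self._conn.commit()

    def created_at(self, holder: str) -> str:
        row = self._conn.execute(
            "SELECT created_at FROM policies WHERE holder = ?", (holder,)
        ).fetchone()
        return row[0]


def test_policy_is_stored_with_timestamp() -> None:
    # Real database engine, throwaway in-memory instance.
    conn = sqlite3.connect(":memory:")
    # The only dependency replaced: the time source we do not control.
    fixed_now = lambda: datetime(2024, 1, 1, tzinfo=timezone.utc)
    store = PolicyStore(conn, fixed_now)

    store.add("alice")

    assert store.created_at("alice") == "2024-01-01T00:00:00+00:00"
    conn.close()
```

In a real project the test instance would more likely be the same database product used in production, started and torn down by the test setup.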

Integration tests provide slower feedback than unit tests because setting up external dependencies consumes much more time than setting up the environment for a unit test. Additionally, more code is executed and inter-process communication might happen during an integration test.

Furthermore, the development and maintenance effort for the automatic setup of external dependencies is much higher. Since an integration test might span many modules of production code, it can become hard to track down the cause of a failure. For these reasons you should try to cover as much behavior as possible using unit tests and only resort to integration tests for verifying your system as a whole.

AAA - Arrange, Act and Assert

Arrange-Act-Assert is a great way to structure test cases. It prescribes the way you structure your test operations.

Arrange inputs and targets. Arrange steps should set up the test case. Does the test require any objects or special settings? Does it need to prep a database? Does it need to log into a web app? Handle all of these operations at the start of the test.

Act on the target behavior. Act steps should cover the main thing to be tested. This could be calling a function or method, calling a REST API, or interacting with a web page. Keep actions focused on the target behavior.

Assert expected outcomes. Act steps should elicit some sort of response. Assert steps verify the goodness or badness of that response. Sometimes, assertions are as simple as checking numeric or string values. Other times, they may require checking multiple facets of a system. Assertions will ultimately determine if the test passes or fails.
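The three steps can be sketched as follows. `ShoppingCart` is a hypothetical class invented for this illustration, and the comments mark where each step begins:

```python
class ShoppingCart:
    """Hypothetical class under test."""

    def __init__(self) -> None:
        self._prices: list[int] = []

    def add(self, price: int) -> None:
        self._prices.append(price)

    def total(self) -> int:
        return sum(self._prices)


def test_total_sums_all_items() -> None:
    # Arrange: create the object and put it into the required state.
    cart = ShoppingCart()
    cart.add(5)
    cart.add(7)

    # Act: perform the single behavior being tested.
    total = cart.total()

    # Assert: verify the outcome of the act step.
    assert total == 12
```

Keeping one act step per test makes it clear which behavior failed when an assertion does not hold.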

FIRST Unit Test Principles

This is a useful acronym for thinking about the properties a good unit test should have.

Fast - as in milliseconds. All your combined unit tests should run in seconds; otherwise you run the risk of not running them as often as you should.

Isolated/Independent - each test should run independently of the other tests and of the order in which they are run.

Repeatable - tests should be repeatable and deterministic, their values shouldn’t change based on being run on different environments. Each test should set up its own data and should not depend on any external factors to run its test.

Self-validating - you shouldn’t have to check manually whether the test passed or failed.

Thorough - all Equivalence Classes should be carefully designed with respect to valid, invalid and boundary values. Test for illegal arguments and variables, for security and other issues, and for large values (what would a large input do to the program?). Tests should try to cover every use case scenario and not just aim for 100% code coverage.

Test Doubles - Mocks, Stubs and Fakes

In automated testing it is common to use objects that look and behave like their production equivalents, but are actually simplified. This reduces complexity and allows code to be verified independently from the rest of the system. Sometimes it is the only way to execute self-validating tests at all. A Test Double is the generic term for these objects.
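As a rough illustration of the three kinds named in the heading, the sketch below uses one of each. All class and function names are hypothetical. The stub and the fake are written by hand, and the mock comes from Python's standard `unittest.mock` module:

```python
from unittest.mock import Mock


class StubRateProvider:
    """Stub: returns a canned answer instead of calling a real service."""

    def rate_for(self, age: int) -> float:
        return 100.0


class FakePolicyRepository:
    """Fake: a working but simplified implementation (a dict, not a database)."""

    def __init__(self) -> None:
        self.saved: dict[str, float] = {}

    def save(self, holder: str, premium: float) -> None:
        self.saved[holder] = premium


def issue_policy(holder, age, rates, repository, notifier) -> float:
    """Hypothetical code under test that depends on three collaborators."""
    premium = rates.rate_for(age)
    repository.save(holder, premium)
    notifier.send(holder, "Policy issued")
    return premium


def test_issue_policy() -> None:
    repository = FakePolicyRepository()
    notifier = Mock()  # Mock: records calls so the test can verify them.

    premium = issue_policy("alice", 22, StubRateProvider(), repository, notifier)

    assert premium == 100.0
    assert repository.saved == {"alice": 100.0}
    notifier.send.assert_called_once_with("alice", "Policy issued")
```

The stub only supplies input to the code under test, the fake holds state that can be inspected afterwards, and the mock is used to verify that an interaction took place.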

This probably doesn’t help the novice much, so you might want to refer to this article.

Test Driven Development - TDD

“A software development technique in which the test cases are developed, and often automated, and then the software is developed incrementally to pass those test cases.” - ISTQB

DevMate is perfectly suited for TDD as it is a Black Box Testing tool.

Shift Left

This means changing your testing approach so that tests are implemented earlier in the coding process. Referring to the Testing Pyramid, if you are relying on System/End-to-End Tests, it means doing more integration tests and, ideally, Unit Tests.

Fully implementing Test Driven Development would represent a full shift to the left and would demonstrate a true focus on quality.

Legacy Code

This term can mean all sorts of things. We all have to deal with Legacy Code but we’d really rather not!

Here are some common interpretations.

Code you’re not comfortable changing

Code without tests

Old code

Bad code

Undocumented code from the past

Here’s an excellent article that goes into Legacy Code in more detail.

Architecture

Fragile code

Code is fragile when even a small change easily breaks it, often in places that seem unrelated. It points to architectural weaknesses and poor coding practices. A solid (sorry) understanding of SOLID principles and Clean Architecture will result in less fragile code.

Rigid code

This refers to code that is difficult to change. It usually means that code is tightly coupled. One change causes a cascade of subsequent changes in dependent modules. This results in a fear of fixing even non-critical problems, as developers don’t know the impact of a change or how long it will take.

Coupling

Broadly speaking, components that depend on other components can be tightly coupled or loosely coupled. In testing, tight coupling is a serious problem because a tightly coupled unit is hard to isolate from its dependencies.

We’ve written some content on Writing testable code, where we explain these concepts in more detail.
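To make the difference concrete, here is a small sketch with two hypothetical classes. The first is tightly coupled to its random source, so a test cannot predict its result. The second receives the source as a parameter, so a test can substitute a deterministic one:

```python
import random


class TightlyCoupledDice:
    """Tightly coupled: uses the global random source directly."""

    def roll(self) -> int:
        return random.randint(1, 6)


class LooselyCoupledDice:
    """Loosely coupled: the random source is passed in and can be replaced."""

    def __init__(self, randint=random.randint) -> None:
        self._randint = randint

    def roll(self) -> int:
        return self._randint(1, 6)


def test_roll_uses_injected_source() -> None:
    # A deterministic stand-in replaces the real random source.
    dice = LooselyCoupledDice(randint=lambda low, high: 4)
    assert dice.roll() == 4
```

Production code can still construct `LooselyCoupledDice()` without arguments and get the real random source, so the looser design costs very little.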

SOLID

SOLID principles are a guideline for writing clean, quality code. If you follow these principles (or other similar ones) it’s much more likely you’re a Software Engineer and not just a Coder.

It’s well beyond the scope of our documentation and we mention it for the benefit of novice programmers.

Using SOLID principles is not an overnight endeavour and so we recommend searching for online courses, videos and guides.

Clean Architecture

SOLID and Clean Architecture are brothers in arms. Whereas SOLID encourages best practice for mid-level coding, Clean Architecture is concerned with how to organize your code into layers.

“Clean Architecture”, as espoused by Robert C. Martin (“Uncle Bob”), is not the only show in town, but the principles and practices it promotes are undoubtedly important.

As with SOLID, explaining Clean Architecture is beyond the scope of our documentation and we mention it for the benefit of novice programmers.